We present 3DSRBench, a new 3D spatial reasoning benchmark that significantly advances the evaluation of 3D spatial reasoning capabilities of LMMs by manually annotating 2,100 VQAs on MS-COCO images

Explore

Explore data from huggingface.co. Demo coming soon...

3DSRBench

3D spatial reasoning is the ability to analyze and interpret the positions, orientations, and spatial relationships of objects within the 3D space. This allows models to develop a comprehensive understanding of the 3D scene, enabling their applicability to a broader range of areas, such as autonomous navigation, robotics, and AR/VR.

We present the first comprehensive 3D spatial reasoning benchmark, 3DSRBench, that features 2,762 manually annotated 3D spatial reasoning questions on diverse and open-vocabulary entities, including rigid objects, humans, animals, and implicit concepts, such as logo on a car or arrow on a billboard.

Scope of 3DSRBench. With 3DSRBench we hope to enable the following: (1) a robust and comprehensive evaluation of 3D spatial reasoning capabilities of state-of-the-art LMMs; (2) studying the robustness of 3D spatial reasoning capabilities w.r.t. common and uncommon camera 6D viewpoints, which is a crucial ability when deployed to downstream tasks in embodied AI and robotics; and (3) a diagnosis benchmark to study the 3D awareness of visual encoders and reasoning abilities of LLMs, shedding light on downstream tasks that build on 3D spatial reasoning, such as automatic navigation and robotic manipulation.

Dataset splits. Our 3DSRBench consists of three splits, a real with 2,100 VQAs on MS-COCO images and two synthetic splits with VQAs on multi-view images rendered with "common" and "uncommon" camera 6D viewpoints of the same 3D scene. We define "common" camera viewpoints as ones positioned at the eye level with natural viewing angles, which are well populated in common image datasets, and others as "uncommon" viewpoints

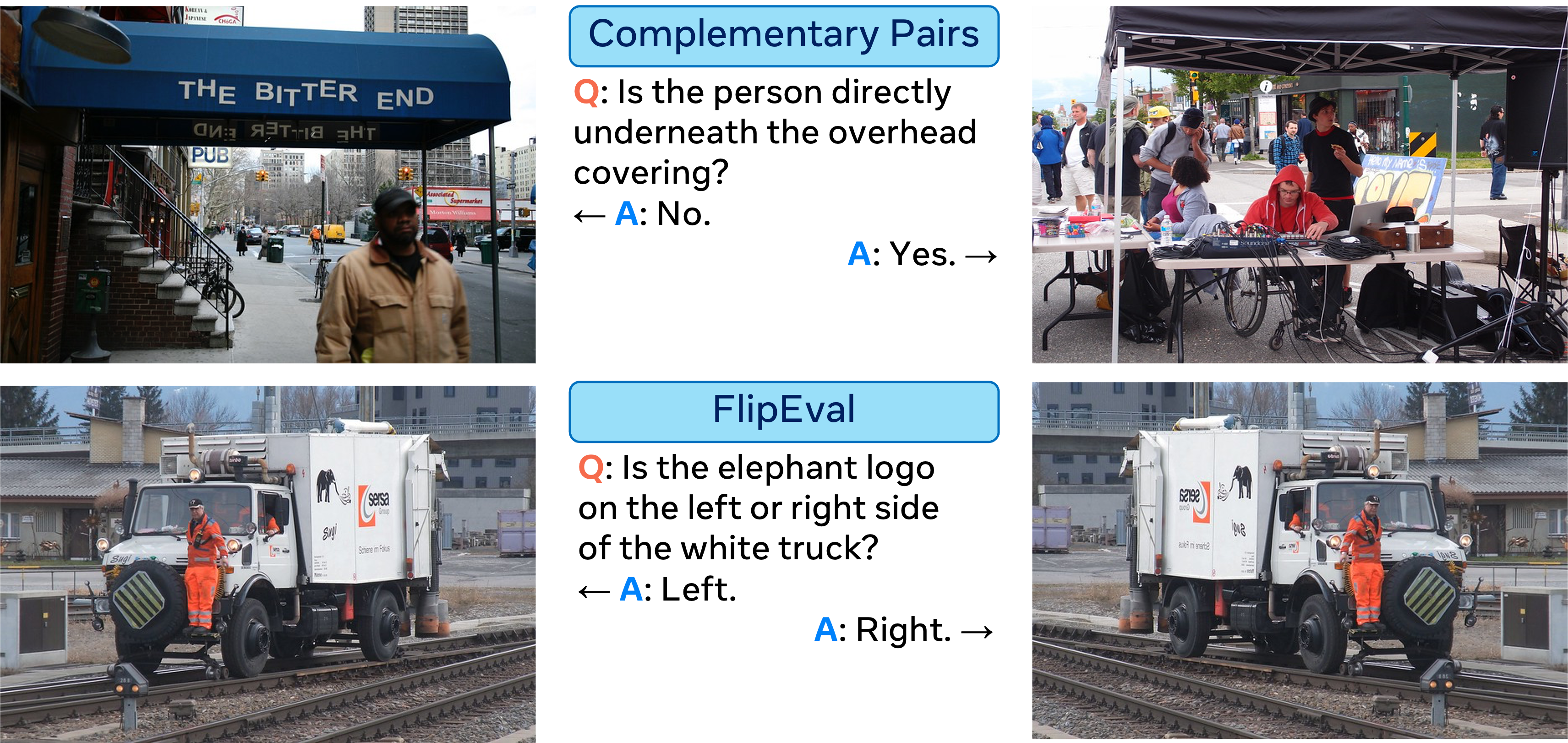

Design considerations. (See Figure 3.) As a manually annotated dataset, our 3DSRBench incorporates the following three key designs: (1) we avoid questions with trivial answers; (2) we adopt a balanced data distribution in various aspects, removing priors in the answer distribution, e.g., pedestrians are often located lower than street lights, or the fact that objects higher in 3D space are also higher in 2D image plane; and (3) robust evaluation strategies, such as CircularEval

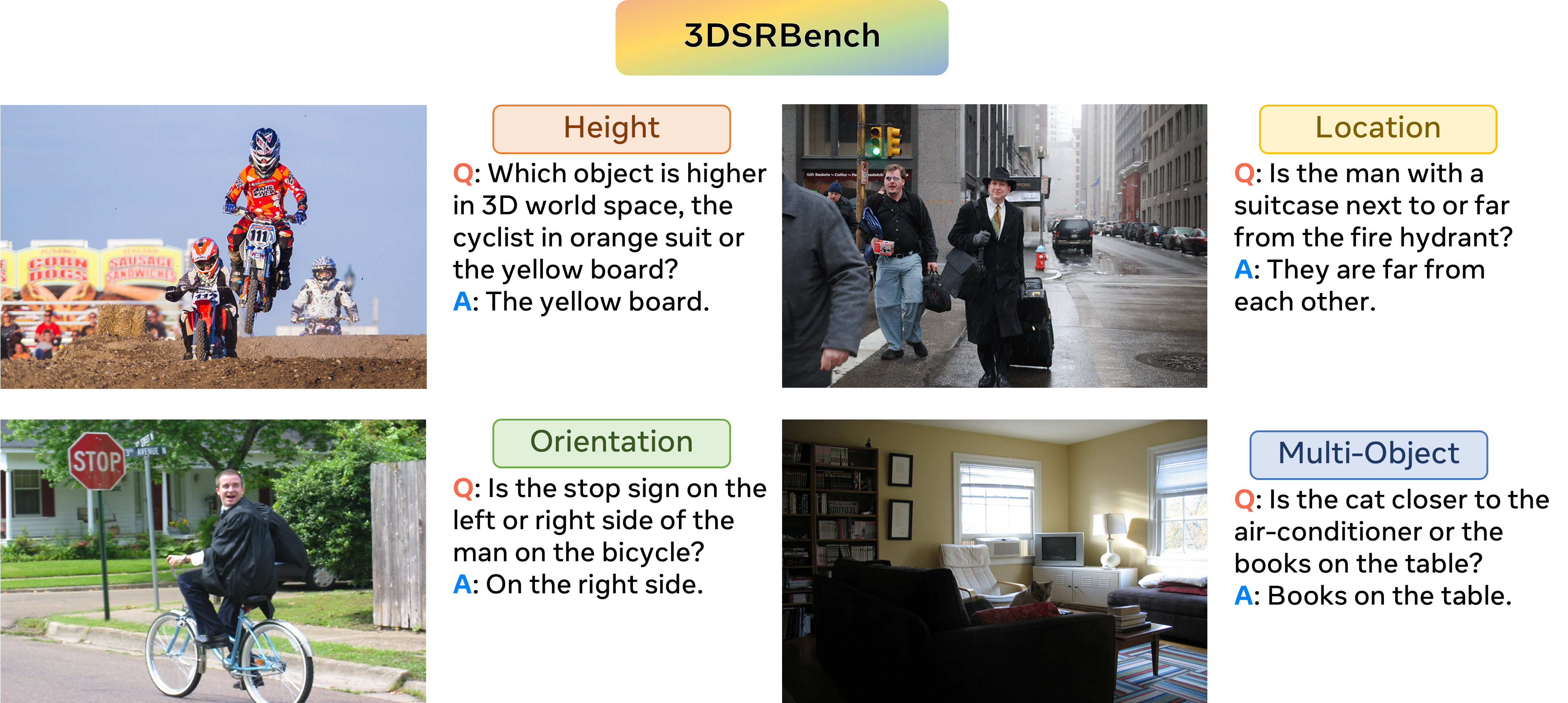

Question types. (See Figure 1.) Our 3DSRBench consists of 12 subtypes of questions from 4 main categories, i.e., height, location, orientation, and multi-object reasoning. Each category of questions focuses on different combinations of 3D properties, such as object 3D location, 3D ground plane, camera extrinsic calibration, and/or object 3D poses.

Key Findings

State-of-the-art LMMs demonstrate limited 3D spatial reasoning capabilties.

| Model | 3DSRBench-real | |||||||

|---|---|---|---|---|---|---|---|---|

| Overall | Height | Location | Orientation | Multi-Object | ||||

| Baselines | ||||||||

| Random | 20.9 | 25.0 | 25.0 | 16.8 | 20.1 | |||

| Random++ | 45.8 | 50.0 | 50.0 | 41.7 | 45.0 | |||

| Open-sourced | ||||||||

| LLaVA-v1.5-7B |

36.8 | 38.5 | 46.4 | 27.7 | 31.8 | |||

| Cambrian-1-8B |

44.1 | 25.6 | 57.0 | 36.5 | 43.1 | |||

| LLaVA-NeXT-8B |

49.6 | 50.6 | 62.7 | 36.8 | 43.6 | |||

| Proprietary | ||||||||

| Claude-Flash | 39.2 | 39.8 | 59.9 | 13.2 | 33.6 | |||

| Claude-Sonnect | 46.9 | 49.6 | 60.0 | 32.8 | 41.2 | |||

| Gemini-Pro | 49.1 | 50.8 | 62.9 | 37.5 | 41.3 | |||

| GPT-4o-mini | 39.1 | 42.1 | 51.8 | 23.4 | 34.6 | |||

| GPT-4o | 45.3 | 49.4 | 62.3 | 23.0 | 40.1 | |||

State-of-the-art LMMs exhibit signifcantly degraded 3D spatial reasoning performance when generalize from "common" to "uncommon" camera 6D viewpoints.

| Model | Camera 6D Viewpoints | |||||||

|---|---|---|---|---|---|---|---|---|

| Common | Uncommon | Change | ||||||

| Baselines | ||||||||

| Random | 20.9 | 20.9 | +0.0% | |||||

| Random++ | 45.8 | 45.8 | +0.0% | |||||

| Open-sourced | ||||||||

| LLaVA-v1.5-7B |

42.0 | 38.0 | -9.5% | |||||

| Cambrian-1-8B |

48.1 | 39.9 | -17.0% | |||||

| LLaVA-NeXT-8B |

45.5 | 36.8 | -19.1% | |||||

| Proprietary | ||||||||

| Claude-Flash | 44.6 | 37.7 | -15.5% | |||||

| Claude-Sonnect | 47.4 | 39.4 | -16.9% | |||||

| Gemini-Pro | 59.9 | 49.5 | -17.4% | |||||

| GPT-4o-mini | 46.5 | 40.3 | -13.3% | |||||

| GPT-4o | 51.2 | 44.3 | -13.5% | |||||

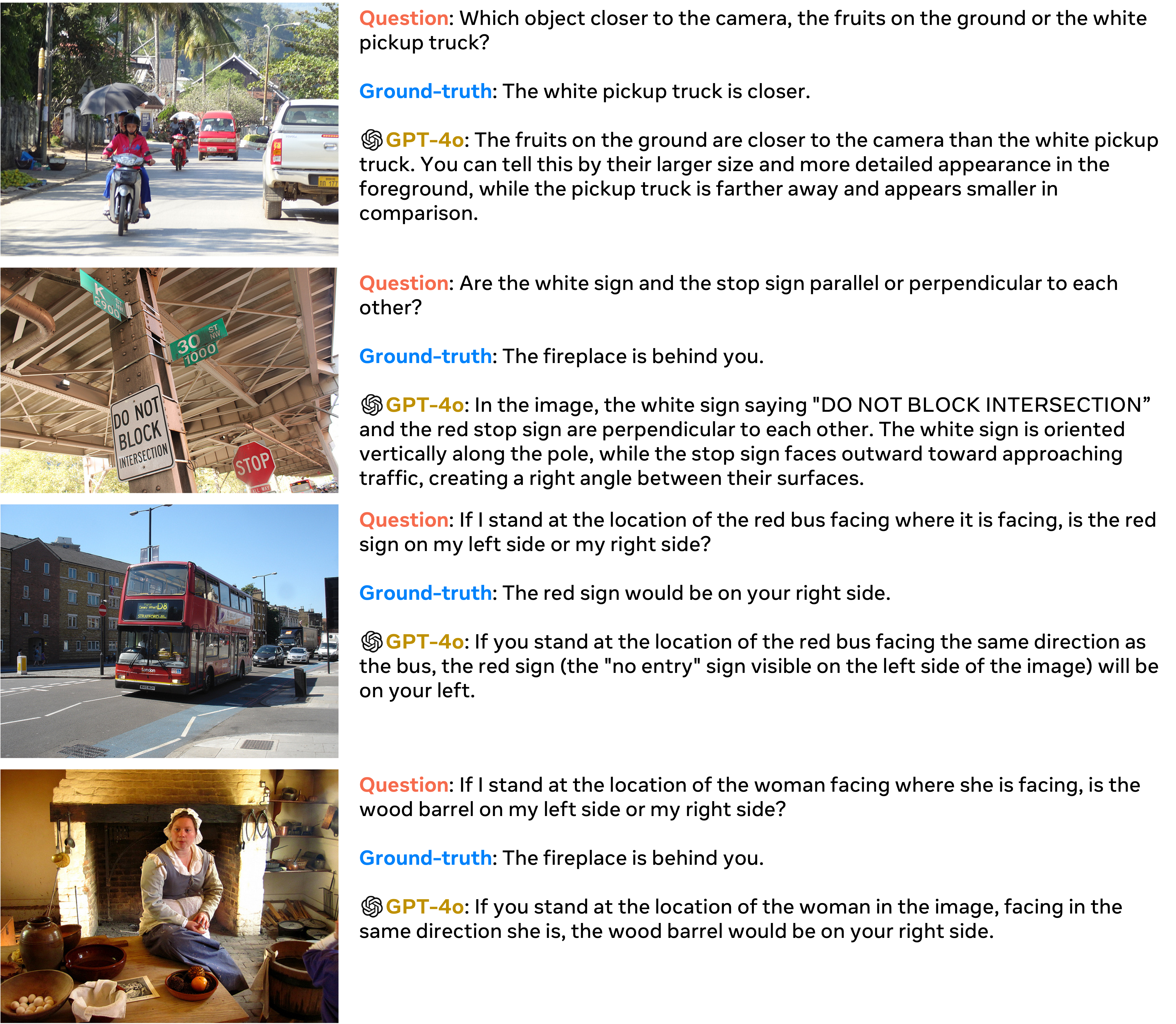

Failure cases of GPT-4o on our 3DSRBench dataset.

Miscellaneous

License. Our 3DSRBench is released under the Creative Commons Attribution 4.0 license. By accessing and using our 3DSRBench, you agree to follow the terms of access specified here.

Ethics. We follow the ethics guidelines at Johns Hopkins University and obtained Institutional Review Board (IRB) approvals prior to the start of our work. We described potential risks to the annotators and explained the purpose of the study and how the collected data would be used. All annotators agreed to join this project voluntarily and were paid by a fair amount as required at our institution.